Scraping Using Python

We are going to use Beautifulsoup and other libraries to scrap Wikipedia:

- List of Countries: On accessing the first page, we’ll extract the list of countries, their population and percentage of world population.

- Country: We’ll then access each country’s page, and get information including total area, percentage water, and GDP (nominal).

Import Libraries¶

- Numpy: To be use with arrays.

- Pandas: To convert the data in a tabular structure so we can manipulate it.

- Urllib: To open the url from which we would like to extract the data.

- BeautifulSoup: This library helps us to get the HTML structure of the page that we want to work with. We can then, use its functions to access specific elements and extract relevant information.

Understanding the data¶

Getting the "raw" data¶

First we will need to get the "raw" data, the HTML content of the specific URL, for this we will create a function that will return this "raw" data.

The Function getHTMLContent() will receive a link or URL, later we are going to use urlopen() to open this URL, tis will enable us to apply Beautifulsoup library.

There are different parsers, for XML and HTML, but in this case, we will use just html.parser

and we return the output of this parser, Beautifulsoup(markup,'html.parser'), so we can extract our data.

Finding the data and display it with prettify()¶

we get the content of the URL but the information we need is in a table

so we are going to store the information we got in a variable, later we will apply the method find_all() from Beautifulsoup and the tag table so we can get all the tables in this HTML, later we are going to print it in a readable way using the method prettify()

The code will print all the table in this HTML, therefore we will need to check which is the table that we need, but first let see the code so far:

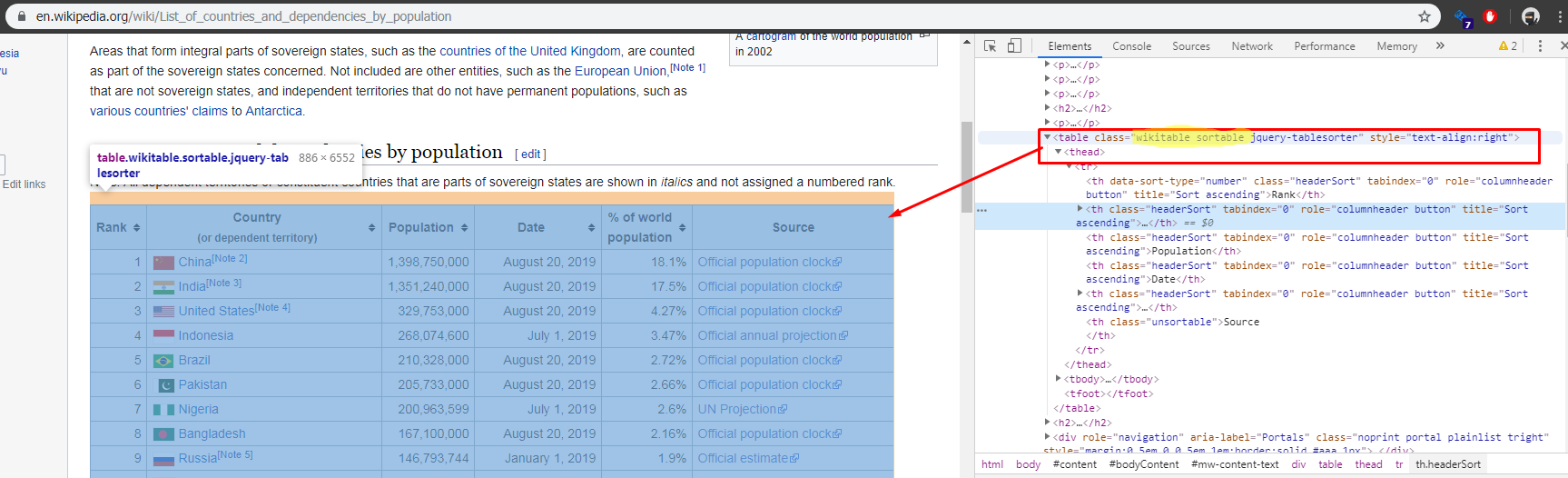

Now we need one table in specific

so we will need to find this table with the class wikitable sortable,

We will use the method find(), since this method allow us no just find a tag but a tag with a specific tag, once we have the table we will get all the rows

now we have all the rows, we need to iterate over them to find the cell that contain the link to the country page> we know that HTML table are detonated with <tr></tr> an each row is either be heading <th></th> or data <td></td> and we know that the country page is in the second column so cells[1] and we use find() to find the a elements, and we extract the link or href with get():

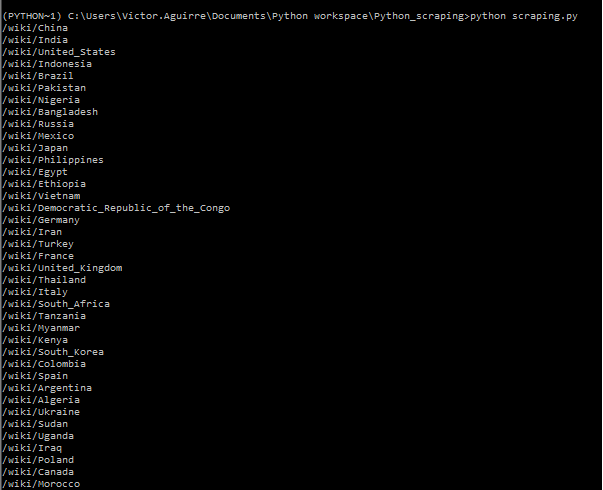

and the result

We can see that the information or links that we got back don't include the first part of the URL so we will need to prefix "https://en.wikipedia.org".

Later we will create a variable called

root_URLthat will contain "https://en.wikipedia.org"

Data for Each country¶

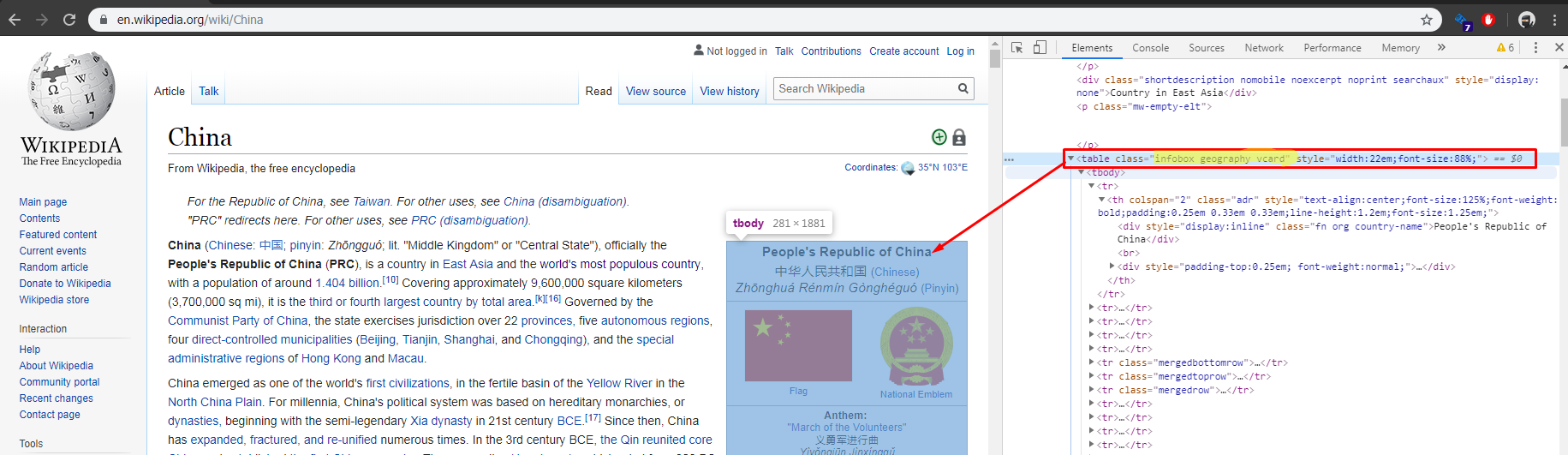

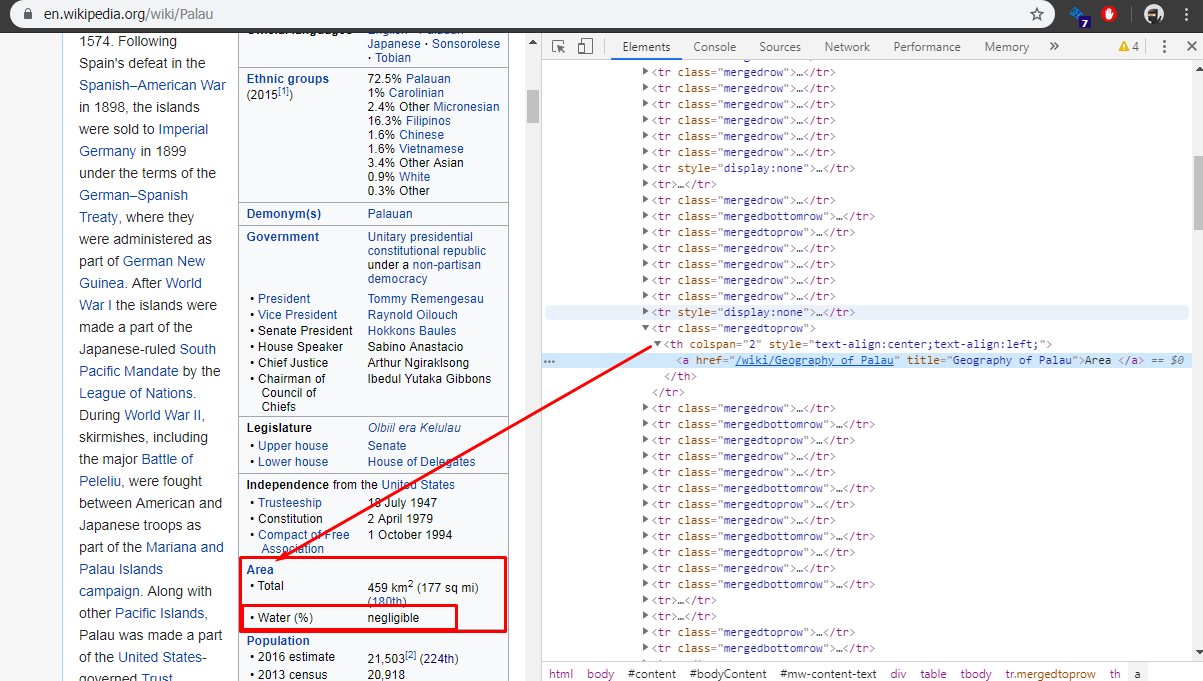

Now, we Will use the list of link to go to each country page and locate the card to the right of the screen where there is the remaining information.

inspecting the country page we found that the class for card mentioned above is infobox geography vcard

Here is when we run into some issues, we are looking for the following fields:

- Area > Total area

- Water (%)

- GDP (nominal) > Total

- Per capita

but their order vary in each country page, so we will need to make some adjustment.

Modifying current code¶

We will start making a small modification in the code, we will create a new function that will return a list with the link of all the counties

Values in different positions - Something to thing about it¶

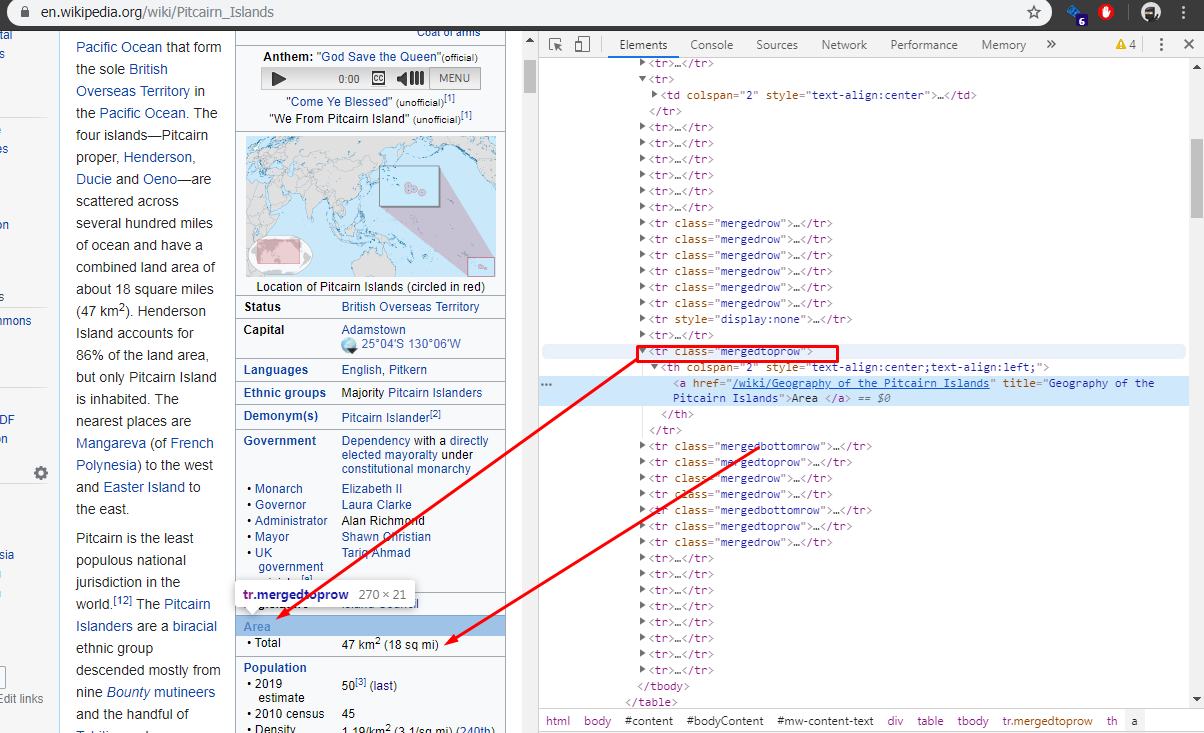

Now, with few pages inspected we can see where will be a problem, the location of the different values we want are in different position depending of the country.

We now the information is in tags with classes mergedrow or mergedrowbottom but the position of the row that contain the information it is not constant, in the first example, we see that we don't have water(%) but in the second page we do have this value, making the position of population one position down in comparison with the first example

To solve this we will add some extra code. We will start by adding a new variable called additional_details, later use it to append the information we collect form each country page to the table with the list of countries. Next, we will create a function getadditionalDetails() and a variable flag read_content, we will explain the usage later.

we are going to use three type of function here:

get()With this function we will find and get the reference of a particular element.get_text()the function will return the text that is within the opening and close tags of an element.strip()This will remove the white spaces at both sides of the string or text.

getadditionalDetails() function:¶

First, we will create a new variable

next, the function

We have a try/except block that will wrap the logic and it will prevent the script to crash if one of the links doesn't provide the information in the same format or if the page is empty

-

We get the links for the countries, remember those links don't have the root url so here we concatenate them with the root URL. We find the table with the classes

infobox geography vcard, and set the flagread_content. -

We use a

forloop to iterate over all the<tr>tags on the table. -

We use a decision block to check if the class in the

<tr>ismergedtoprowandnot read_content. if the condition are match we save the content of<a>on the variablelink. -

the next two condition blocks are checking if the information on the page is following the order that we want, in which case we set the

read_contentto True , otherwiseread_contentwill be set as False. -

the

elifpart of the initial conditional will be the one that will populate the variableadditional_details -

Finally the

exceptblock, this will be used to report back if there is any issue.