Introduction to computer vision

In the previous notes we used NumPy arrays to provide the data for training the model. In a real scenario, hard-coding the data won't be possible. In the following example we are going to use a dataset called Fashion-MNIST, and since it has 60,000 examples for training and 10,000 examples for testing, we need to load the data in a different way.

Importing Fashion-MNIST using Keras

Fortunately, it's still quite simple, because Fashion-MNIST is available as a dataset with an API call in TensorFlow. We simply get the dataset object from the Keras datasets module (tf.keras.datasets.fashion_mnist). If we call its load_data method, it returns four NumPy arrays, grouped into two tuples: the training data and the training labels, and the testing data and the testing labels.
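A minimal sketch of this loading step, assuming TensorFlow 2.x is installed and the machine can download the dataset the first time it is requested; the variable names are our own choice:

```python
import tensorflow as tf

# Reference the Fashion-MNIST dataset that ships with Keras
fashion_mnist = tf.keras.datasets.fashion_mnist

# load_data() returns two tuples: (training data, training labels)
# and (testing data, testing labels)
(training_images, training_labels), (test_images, test_labels) = fashion_mnist.load_data()

print(training_images.shape)  # 60,000 training images of 28x28 pixels
print(test_images.shape)      # 10,000 testing images of 28x28 pixels
print(training_labels[0])     # each label is a single number from 0 to 9
```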

Input Shape

Here you saw how the data can be loaded into Python data structures that make it easy to train a neural network.

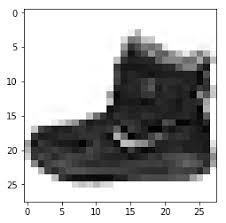

Each image is represented as a 28x28 array of greyscale values, and its label is a number. Using a number is a first step in avoiding bias: labelling the image with words in a specific language would exclude people who don't speak that language!

Labels

Each training and test example is assigned to one of the following labels:

| Label | Description |
|---|---|
| 0 | T-shirt/top |
| 1 | Trouser |
| 2 | Pullover |
| 3 | Dress |
| 4 | Coat |
| 5 | Sandal |
| 6 | Shirt |
| 7 | Sneaker |
| 8 | Bag |
| 9 | Ankle boot |
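Because the labels are plain numbers, mapping one back to its description from the table is a simple list lookup. A small sketch; `class_names` and `label_to_name` are hypothetical helper names, not part of the Keras API:

```python
# Human-readable names for the Fashion-MNIST labels, indexed by label number
class_names = [
    "T-shirt/top", "Trouser", "Pullover", "Dress", "Coat",
    "Sandal", "Shirt", "Sneaker", "Bag", "Ankle boot",
]


def label_to_name(label):
    """Return the clothing description for a numeric label (0-9)."""
    # int() also accepts NumPy integers such as the uint8 labels from load_data()
    return class_names[int(label)]


print(label_to_name(9))  # Ankle boot
```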

About the layers of the model

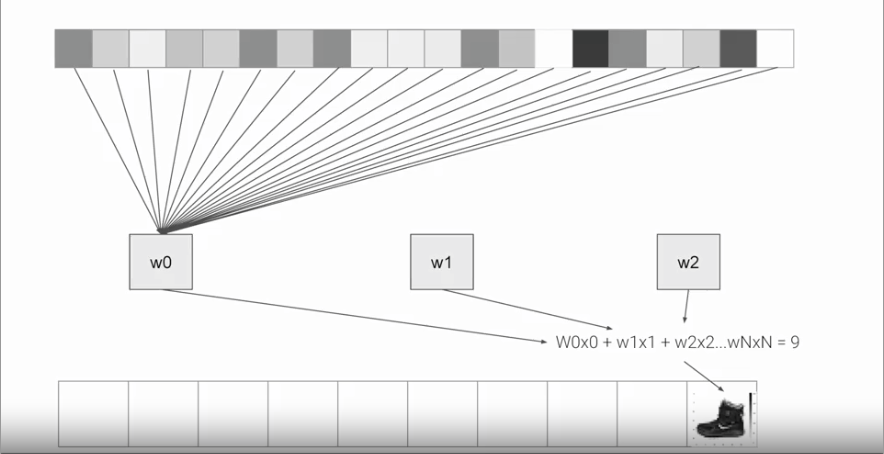

Now let's look at the code for the neural network definition. Previously we had just one layer; now we have three. The important ones to look at are the first and the last layers.

The last layer has 10 neurons because we have ten classes of clothing in the dataset; the number of neurons in the last layer should always match the number of classes. The first layer is a Flatten layer with an input shape of 28 by 28, because the images are 28x28, so we're specifying the shape we expect the data to be in. Flatten takes this 28 by 28 square and turns it into a simple linear array of 784 values.
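To see what flattening does to the shape, here is a NumPy sketch. The all-zeros `image` array is a made-up stand-in for one 28x28 image, and `reshape` only illustrates the idea; it is not how Keras implements its Flatten layer internally:

```python
import numpy as np

# Stand-in for one 28x28 greyscale image (pixel values 0-255)
image = np.zeros((28, 28), dtype=np.uint8)
image[1, 0] = 255  # mark the first pixel of the second row

# Flattening lays the rows out one after another: 28 * 28 = 784 values
flat = image.reshape(-1)

print(image.shape)  # (28, 28)
print(flat.shape)   # (784,)
print(flat[28])     # 255: row 1, column 0 lands right after the 28 values of row 0
```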

The interesting stuff happens in the middle layer, sometimes also called a hidden layer. It has 128 neurons, and we can think of these neurons as variables in a function. Maybe call them x1, x2, x3, etc.
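A sketch of the three-layer model described above, assuming TensorFlow 2.x. Here `tf.keras.Input(shape=(28, 28))` declares the expected input shape, which does the same job as passing `input_shape=(28, 28)` to the Flatten layer:

```python
import tensorflow as tf

model = tf.keras.models.Sequential([
    tf.keras.Input(shape=(28, 28)),                   # each image is 28x28
    tf.keras.layers.Flatten(),                        # 28x28 square -> 784 values
    tf.keras.layers.Dense(128, activation="relu"),    # hidden layer with 128 neurons
    tf.keras.layers.Dense(10, activation="softmax"),  # one neuron per clothing class
])

# Prints each layer's output shape and number of parameters
model.summary()
```

In the summary, the hidden layer accounts for 784 × 128 weights plus 128 biases, i.e. 100,480 parameters, and the output layer has 10 values, one per class.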

Suppose the function is y equals w1 times x1, plus w2 times x2, plus w3 times x3, all the way up to w128 times x128: \(y = w_1x_1 + w_2x_2 + w_3x_3 + \dots + w_{128}x_{128}\). By figuring out the right values of w, the network can make y come out as 9 when it is given the picture of the shoe, because 9 is the label for the ankle boot category.
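The weighted sum in this formula is a dot product. A NumPy sketch with made-up values (all weights set to 1, inputs 0 to 127), purely to show the arithmetic; a real Dense layer also adds a bias term and applies its activation function:

```python
import numpy as np

x = np.arange(128, dtype=np.float64)  # made-up inputs; x[0] plays the role of x1
w = np.ones(128)                      # made-up weights; w[0] plays the role of w1

# y = w1*x1 + w2*x2 + ... + w128*x128
y = np.dot(w, x)

# The same result written out as an explicit sum
y_loop = sum(w[i] * x[i] for i in range(128))

print(y)  # 8128.0
```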

You can try this exercise in the Colab notebook "A Computer Vision Example".

An Example of a script

A full script will look like:
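A minimal sketch of such a script, assuming TensorFlow 2.x. The scaling of pixel values to 0-1, the `adam` optimizer, the sparse categorical cross-entropy loss and the 5 epochs are our own assumptions about a typical setup, and the helper functions `load_data`, `build_model` and `main` are hypothetical names:

```python
import tensorflow as tf


def load_data():
    """Load Fashion-MNIST and scale pixel values from 0-255 to 0-1."""
    (training_images, training_labels), (test_images, test_labels) = (
        tf.keras.datasets.fashion_mnist.load_data()
    )
    # Scaling (normalizing) the inputs usually helps training converge
    return (training_images / 255.0, training_labels), (test_images / 255.0, test_labels)


def build_model():
    """Flatten -> Dense(128, relu) -> Dense(10, softmax)."""
    model = tf.keras.models.Sequential([
        tf.keras.Input(shape=(28, 28)),
        tf.keras.layers.Flatten(),
        tf.keras.layers.Dense(128, activation="relu"),
        tf.keras.layers.Dense(10, activation="softmax"),
    ])
    # Labels are integers 0-9, so sparse categorical cross-entropy fits
    model.compile(
        optimizer="adam",
        loss="sparse_categorical_crossentropy",
        metrics=["accuracy"],
    )
    return model


def main():
    (training_images, training_labels), (test_images, test_labels) = load_data()
    model = build_model()
    model.fit(training_images, training_labels, epochs=5)
    # Check how well the model does on images it has never seen
    model.evaluate(test_images, test_labels)


if __name__ == "__main__":
    main()
```

Keeping the model definition in its own function makes it easy to reuse the same architecture with a different dataset.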

Explanation of some Keywords

- Sequential: That defines a SEQUENCE of layers in the neural network.
- Flatten: Remember how our images were a square when you printed them out? Flatten just takes that square and turns it into a one-dimensional array.
- Dense: Adds a layer of neurons.

Each layer of neurons needs an activation function to tell them what to do. There are lots of options, but just use these for now:

- Relu effectively means "if X > 0 return X, else return 0", so it only passes values of 0 or greater to the next layer in the network.
- Softmax takes a set of values and turns them into probabilities that add up to 1, so the biggest value gets the highest probability. For example, if the output of the last layer looks like [0.1, 0.1, 0.05, 0.1, 9.5, 0.1, 0.05, 0.05, 0.05], softmax turns it into roughly [0, 0, 0, 0, 1, 0, 0, 0, 0]. This saves you from fishing through the output looking for the biggest value. The goal is to save a lot of coding!
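The two activation functions can be sketched in a few lines of NumPy. These are illustrative re-implementations, not the code Keras runs internally; subtracting the maximum before exponentiating is a common trick for numerical stability that does not change the result:

```python
import numpy as np


def relu(x):
    """Return x where x > 0, else 0 (element-wise)."""
    return np.maximum(x, 0)


def softmax(z):
    """Turn a vector of scores into probabilities that sum to 1."""
    e = np.exp(z - np.max(z))  # shift by the max to avoid overflow in exp()
    return e / e.sum()


print(relu(np.array([-2.0, 0.0, 3.5])))  # negative values become 0

scores = np.array([0.1, 0.1, 0.05, 0.1, 9.5, 0.1, 0.05, 0.05, 0.05])
probs = softmax(scores)
print(np.round(probs, 3))     # almost all of the probability lands on index 4
print(int(np.argmax(probs)))  # 4
```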

Callback to stop the training

First, we need to create the class myCallback.
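A sketch of such a class, assuming TensorFlow 2.x and a model compiled with `metrics=["accuracy"]`. The 85% threshold and the `ACCURACY_THRESHOLD` name are hypothetical choices; `on_epoch_end` and `self.model.stop_training` are the standard Keras callback hooks:

```python
import tensorflow as tf

# Hypothetical threshold: stop once training accuracy passes 85%
ACCURACY_THRESHOLD = 0.85


class myCallback(tf.keras.callbacks.Callback):
    """Stops training once the accuracy reported at the end of an epoch is high enough."""

    def on_epoch_end(self, epoch, logs=None):
        logs = logs or {}
        accuracy = logs.get("accuracy")  # present when compiled with metrics=["accuracy"]
        if accuracy is not None and accuracy > ACCURACY_THRESHOLD:
            print(f"\nReached {ACCURACY_THRESHOLD:.0%} accuracy, so cancelling training!")
            self.model.stop_training = True
```

Using `logs=None` instead of a mutable `{}` default avoids sharing one dictionary across calls.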

Then we instantiate the myCallback class.

Now we can change the call to the fit function to pass in the callback.

So the completed script will be:
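A minimal sketch of the completed script, assuming TensorFlow 2.x. The 85% threshold, the scaling to 0-1, the optimizer, the loss, the 10-epoch limit and the helper names `build_model` and `main` are our own assumptions:

```python
import tensorflow as tf

# Hypothetical threshold: stop once training accuracy passes 85%
ACCURACY_THRESHOLD = 0.85


class myCallback(tf.keras.callbacks.Callback):
    def on_epoch_end(self, epoch, logs=None):
        logs = logs or {}
        accuracy = logs.get("accuracy")
        if accuracy is not None and accuracy > ACCURACY_THRESHOLD:
            print(f"\nReached {ACCURACY_THRESHOLD:.0%} accuracy, so cancelling training!")
            self.model.stop_training = True


def build_model():
    model = tf.keras.models.Sequential([
        tf.keras.Input(shape=(28, 28)),
        tf.keras.layers.Flatten(),
        tf.keras.layers.Dense(128, activation="relu"),
        tf.keras.layers.Dense(10, activation="softmax"),
    ])
    model.compile(
        optimizer="adam",
        loss="sparse_categorical_crossentropy",
        metrics=["accuracy"],  # makes "accuracy" available in the callback's logs
    )
    return model


def main():
    # Instantiate the callback
    callbacks = myCallback()

    (training_images, training_labels), (test_images, test_labels) = (
        tf.keras.datasets.fashion_mnist.load_data()
    )
    training_images = training_images / 255.0
    test_images = test_images / 255.0

    model = build_model()
    # Training ends after the first epoch whose accuracy exceeds the threshold,
    # or after 10 epochs if that never happens
    model.fit(training_images, training_labels, epochs=10, callbacks=[callbacks])
    model.evaluate(test_images, test_labels)


if __name__ == "__main__":
    main()
```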

Now here is an example with the other dataset, the original MNIST, which contains handwritten digits from 0 to 9.
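A sketch of the same approach applied to MNIST, assuming TensorFlow 2.x. MNIST digits are also 28x28 greyscale images with 10 classes, so only the dataset changes; the 98% threshold, the 10-epoch limit and the helper names are hypothetical choices:

```python
import tensorflow as tf

# Hypothetical threshold for the digits dataset
ACCURACY_THRESHOLD = 0.98


class myCallback(tf.keras.callbacks.Callback):
    def on_epoch_end(self, epoch, logs=None):
        logs = logs or {}
        accuracy = logs.get("accuracy")
        if accuracy is not None and accuracy > ACCURACY_THRESHOLD:
            print(f"\nReached {ACCURACY_THRESHOLD:.0%} accuracy, so cancelling training!")
            self.model.stop_training = True


def build_model():
    model = tf.keras.models.Sequential([
        tf.keras.Input(shape=(28, 28)),                   # digits are 28x28 too
        tf.keras.layers.Flatten(),
        tf.keras.layers.Dense(128, activation="relu"),
        tf.keras.layers.Dense(10, activation="softmax"),  # one neuron per digit 0-9
    ])
    model.compile(
        optimizer="adam",
        loss="sparse_categorical_crossentropy",
        metrics=["accuracy"],
    )
    return model


def main():
    # The only real change: load MNIST instead of Fashion-MNIST
    (training_images, training_labels), (test_images, test_labels) = (
        tf.keras.datasets.mnist.load_data()
    )
    training_images = training_images / 255.0
    test_images = test_images / 255.0

    model = build_model()
    model.fit(training_images, training_labels, epochs=10, callbacks=[myCallback()])
    model.evaluate(test_images, test_labels)


if __name__ == "__main__":
    main()
```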